Two of the many things I love about the ASP.NET Web API are:

- It produces human-readable output. This is immediately useful when creating a Web API-based (SOA) service—you don’t need to have a consuming application in place before you can start testing functionality.

- The ability to Self-Host. The Web API can be hosted in a process outside of IIS with very little setup or configuration. This is a fantastic option for services that don’t really lend themselves to running as a web application, for example services with no UI or processes that need to remain resident at all times (timers, messaging handlers, etc.). It’s also an easy way to expose functionality from a legacy application that runs in a Windows Service or console application.

On a recent project, my team was struggling to troubleshoot a problem with a rather complex Windows Service. Worse, the problem showed up in the test environment, while the service appeared to work flawlessly in the development and demo environments. Adding to our misery, the problem came up intermittently, with no apparent rhyme or reason to when or why it happened. As any developer knows, the worst type of bug is one that you can’t reproduce easily.

The obvious approach would be to add logging around the key areas of the system where the problem would present itself. This was discussed, but because of the complexity of the system, putting logging around the problem would result in a massive number of writes to the log, and a very labor-intensive task to parse all that data.

What we needed was a mechanism to see the real-time, current state of certain parts of the application, and to see it on demand.

Simple Self-Hosted Web API Example

This is a technique that should (probably) be used sparingly, as I wouldn’t recommend exposing the state of all of your Windows Services via the Web API (or any other tool). With the ability to expose state externally, and in a human-readable form, there may come a temptation to allow this to bleed into the design of your application. This is similar in principle to making a private method (or property) public in the name of testability—the structure of your code/objects should reflect the domain you are trying to represent, and shouldn’t be released to production exposing state just for debugging/testing purposes.

With all those disclaimers out of the way, let’s take a look at our console app. I won’t show all the steps to setting up a new solution in Visual Studio, but will focus on the required bits to get the Self-Hosting up and running. The actual problem would be a bit much to explain, and coming up with a valid analogy is difficult, so I’m just going to use a simple example. I’m also going to use a console application instead of a Windows Service, as it works exactly the same way.

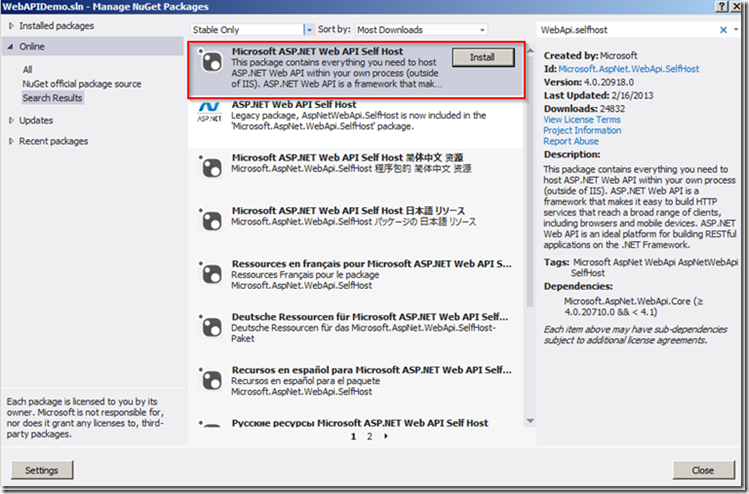

Add the Self-Hosted Web API assemblies to your project using NuGet. From the Library Package Manager, search online for WebApi.SelfHost, and install Microsoft ASP.NET Web API Self Host:

If you prefer to use the PowerShell console, use: Install-Package AspNetWebApi.SelfHost.

If this is successful, your project should have a reference to the System.Web.Http.SelfHost library:

And now, the few lines of code to configure Self Hosted Web API:

    using System;
    using System.Web.Http;
    using System.Web.Http.SelfHost;

    namespace WebAPIDemo
    {
        internal class Program
        {
            private static HttpSelfHostServer _server;

            private static void Main(string[] args)
            {
                // Base address the self-hosted server listens on.
                var config = new HttpSelfHostConfiguration("http://localhost:1108");

                // Route requests of the form api/{controller}/{id}; the id segment is optional.
                config.Routes.MapHttpRoute(
                    "DefaultApi",
                    "api/{controller}/{id}",
                    new { controller = "Home", id = RouteParameter.Optional });

                _server = new HttpSelfHostServer(config);
                _server.OpenAsync().Wait();

                Console.WriteLine("Press enter to exit");
                Console.ReadLine();
            }
        }
    }

For simplicity’s sake, I chose to configure this directly in the console application’s entry point and keep the server in a static variable. Instead of using MapRoute to set up our routes (as we would in an MVC application), we use MapHttpRoute. We also add the path “api/” before the controller in our route, which helps the consumer differentiate between a call to an MVC controller (that renders HTML) and an API/service call (that returns data). This is, of course, only a convention, and we could make the path anything we choose.

As we create an instance of HttpSelfHostConfiguration, we need to specify the URL where the service will be listening for HTTP requests. The address is hard-coded in this example; in a real service it would likely be loaded from a configuration file or some other means. Reserving a URL for listening to HTTP requests requires specific permissions, so if you are not running Visual Studio as Administrator, you may get the following error when you try to debug:

“HTTP could not register URL http://+:1108/. Your process does not have access rights to this namespace (see http://go.microsoft.com/fwlink/?LinkId=70353 for details).”

The process that listens on the HTTP address needs administrator access or permission to reserve the URL. Either run the code as administrator, or reserve the URL for your account (for example, with the netsh http add urlacl command) by following the directions here:

Configuring HTTP for Windows Vista
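As noted above, the base address would normally come from configuration rather than being hard-coded. A minimal sketch of one way to do that, assuming an appSettings entry named SelfHostBaseAddress and a helper class named HostSettings (both hypothetical, not from the original project), plus a project reference to the System.Configuration assembly:

```csharp
using System.Configuration;
using System.Web.Http.SelfHost;

// Hypothetical helper; the class name and the settings key are illustrative only.
internal static class HostSettings
{
    // Expects an entry like this in App.config:
    //   <add key="SelfHostBaseAddress" value="http://localhost:1108" />
    private const string BaseAddressKey = "SelfHostBaseAddress";

    public static HttpSelfHostConfiguration CreateConfiguration()
    {
        // AppSettings returns null for a missing key, so fall back to the
        // address used throughout this article.
        string baseAddress = ConfigurationManager.AppSettings[BaseAddressKey]
                             ?? "http://localhost:1108";

        return new HttpSelfHostConfiguration(baseAddress);
    }
}
```

Main() could then call HostSettings.CreateConfiguration() instead of constructing the configuration inline. Whatever address ends up in the file is the one that needs the URL reservation described above.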

The sample application is a simple (!) queue manager, where items get queued up for processing via one timer, and then get processed via a separate timer. The issue is that some items seem to get processed much later than expected. Maybe this isn’t an issue itself, but users (or developers) need insight into when and why this happens so a compensating action can be taken.

This was put together very quickly to demonstrate this technique, and should not be held up as a standard of good code (e.g. I’m pretty sure it’s not thread-safe):

    using System;
    using System.Collections.Generic;
    using System.Timers;

    public class OrderProcessor
    {
        private readonly Queue<string> _items = new Queue<string>();
        private readonly Timer _addTimer = new Timer();
        private readonly Timer _removeTimer = new Timer();
        private readonly Random _random = new Random();
        private int _itemCounter;

        public void StartProcessingItems()
        {
            // Add a new item to the queue once per second.
            _addTimer.Interval = 1000;
            _addTimer.AutoReset = true;
            _addTimer.Elapsed += AddElapsed;
            _addTimer.Start();

            // Try to process one item per second.
            _removeTimer.Interval = 1000;
            _removeTimer.AutoReset = true;
            _removeTimer.Elapsed += RemoveElapsed;
            _removeTimer.Start();
        }

        private void AddElapsed(object sender, ElapsedEventArgs e)
        {
            _items.Enqueue("Item #" + _itemCounter);
            _itemCounter++;
        }

        private void RemoveElapsed(object sender, ElapsedEventArgs e)
        {
            if (_items.Count > 0)
            {
                string item = _items.Dequeue();
                if (!IsReadyForProcessing(ref item))
                {
                    // Add it back into the queue (now at the end, so it is processed late).
                    _items.Enqueue(item);
                }
            }
        }

        private bool IsReadyForProcessing(ref string item)
        {
            const string notReadyMessage = " is not ready for processing because it failed Validation!";

            // Roughly one call in four rejects the item, but each item is rejected at most once.
            if (_random.Next(1, 5) == 1)
            {
                if (!item.Contains(notReadyMessage))
                {
                    item += notReadyMessage;
                    return false;
                }
            }
            return true;
        }
    }
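On the thread-safety caveat: System.Timers.Timer raises Elapsed on thread-pool threads when no SynchronizingObject is set, so the two handlers above can touch the Queue at the same time. A minimal sketch of one way to guard it, assuming a single lock is acceptable for this workload. The SafeItemQueue class and its member names are hypothetical and are not part of the original sample:

```csharp
using System.Collections.Generic;

// Hypothetical wrapper: every access to the inner queue goes through one lock.
public class SafeItemQueue
{
    private readonly object _sync = new object();
    private readonly Queue<string> _items = new Queue<string>();

    public void Enqueue(string item)
    {
        lock (_sync)
        {
            _items.Enqueue(item);
        }
    }

    // Returns false (instead of throwing) when the queue is empty.
    public bool TryDequeue(out string item)
    {
        lock (_sync)
        {
            if (_items.Count == 0)
            {
                item = null;
                return false;
            }

            item = _items.Dequeue();
            return true;
        }
    }

    // Copies the current contents so callers never enumerate the live queue.
    public List<string> Snapshot()
    {
        lock (_sync)
        {
            return new List<string>(_items);
        }
    }
}
```

The Snapshot method matters most for the technique in this article: a request thread that reads the queue while a timer callback modifies it would otherwise risk an exception or a torn view of the state.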

I also need to add two lines to my Program class, a public static variable to hold an instance of the OrderProcessor:

public static readonly OrderProcessor _orderProcessor = new OrderProcessor();

And code to tell it to start processing. This can really be anywhere in Main() before the Console.ReadLine() call:

_orderProcessor.StartProcessingItems();

If we run this as-is, the processing will not be able to keep up with the number of items that are being put in the queue, as the two timers run on the same interval and some items get returned to the queue. You’ll have to use your imagination to envision a more complex case, as clearly a bit of logging code would suffice to help understand what is going on in this simple example.

To expose the state of the queue at the class level, I added the following code to the OrderProcessor:

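    // Requires "using System.Linq;" for ToList(), which returns a copy of the queue's current contents.
    public List<string> GetQueuedItems()
    {
        return _items.ToList();
    }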

I would again caution that if this breaks the natural encapsulation of your design, you probably just want to use this as a temporary measure while debugging, and remove it before it goes to final QA testing and production.
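One way to keep a temporary diagnostic member out of production builds is conditional compilation. A sketch, assuming a custom compilation symbol named EXPOSE_STATE (a hypothetical name) that is defined only in the build configurations used for troubleshooting. The class is trimmed to the relevant members:

```csharp
using System.Collections.Generic;
using System.Linq;

public class OrderProcessor
{
    private readonly Queue<string> _items = new Queue<string>();

    // ... timers and processing logic as shown earlier ...

#if EXPOSE_STATE
    // Compiled only when EXPOSE_STATE is defined, so the method does not
    // exist at all in builds without the symbol.
    public List<string> GetQueuedItems()
    {
        return _items.ToList();
    }
#endif
}
```

Any code that calls GetQueuedItems() needs the same #if guard, otherwise builds without the symbol will fail to compile. That failure is arguably useful, since it makes a leftover dependency on the diagnostic member hard to miss.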

Finally, to expose this functionality to the outside world via HTTP, I add a very simple controller that calls the above method:

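    using System.Collections.Generic;
    using System.Web.Http;

    public class ApplicationStateController : ApiController
    {
        // The "Get" prefix maps this action to HTTP GET requests by convention.
        public List<string> GetItems()
        {
            return Program._orderProcessor.GetQueuedItems();
        }
    }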

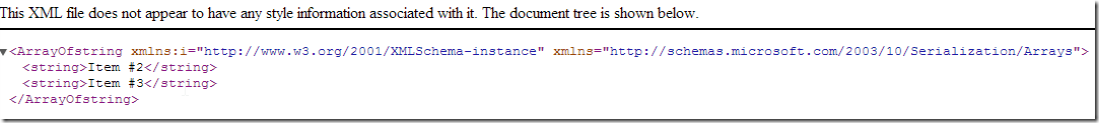

When I run the app and navigate to the URL that represents the ApplicationStateController (http://localhost:1108/api/ApplicationState), I can immediately start seeing the current state of the queue.
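A browser is not the only way to read this endpoint. The sketch below polls the same URL from a small console program, assuming the self-hosted service from this article is already running on http://localhost:1108 and that the project references System.Net.Http. The QueueStateClient class name is hypothetical:

```csharp
using System;
using System.Net.Http;
using System.Net.Http.Headers;

// Hypothetical throwaway client for reading the queue state from code.
internal static class QueueStateClient
{
    private static void Main()
    {
        using (var client = new HttpClient())
        {
            // Ask for JSON explicitly rather than relying on the server's default formatter.
            client.DefaultRequestHeaders.Accept.Add(
                new MediaTypeWithQualityHeaderValue("application/json"));

            // Blocks until the response arrives; acceptable for a one-off diagnostic tool.
            string body = client
                .GetStringAsync("http://localhost:1108/api/ApplicationState")
                .Result;

            Console.WriteLine(body);
        }
    }
}
```

Running this in a loop, or from a scheduled script, gives a cheap way to capture the state at intervals without adding logging to the service itself.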

If I wait a little longer, I can start seeing the items that are getting processed out of order, which show up randomly:

Because the output of the service is human-readable, I can easily see that Item #39 is currently queued to be processed out of order, and I can see the exact reason why.

Conclusion

With this new functionality, we were easily able to root out the cause of the problem—a race condition that was the result of slow/overburdened hardware in the test environment. Although the system we were troubleshooting was much more complex than the sample shown above, the technique was identical, and involved exposing current state in a few key places. This technique should not take the place of other means of rooting out problems, like logging, code reviews and normal debugging, but in cases where simply knowing the exact current state of the system in real time can shed light on a problem, I find this approach to be infinitely useful.